Week 6 - Areas for Prototyping

We’re exploring the question: How might tech support communities to carry out collective decision-making and collective design?

Short version:

- Bea wrote some thoughts on the role of collective intelligence in grantmaking

- We finished listening to interviews and completed the affinity-mapping exercise.

- We made a circular journey map

- At Show & Tell #3 we pitched three areas for prototyping

Longer version:

What is the role of collective intelligence in grantmaking? (Bea)

Recap! Bea’s exploring these questions:

- What is the role of collective intelligence in grantmaking?

- When human intelligence is combined with machine intelligence, how and where might this be useful in grantmaking?

- What other kinds of ‘intelligences’ or ways of knowing or intuiting could communities draw on when imagining, decision-making, deliberating etc.?

- If ‘collective intelligence’ was to grow into ‘collective wisdom’ how might that be developed during a grantmaking process?

- Are there any examples out there of work that’s drawing on the collective intelligence / wisdom of communities and combining it with data/machine intelligence?

She’s been deep in the material for the last two weeks, traversing diverse subjects from group behaviour to feminist cartography and phenomenology to collective wisdom. She’s also been exploring virtual environments and, of course, drawing on our user research.

After going broad with her research, Bea’s narrowed down to four ideas to inform the next part of the work:

- Collective intelligence is more powerful than individual intelligence

- Lived and embodied experience is as valuable as academic/practice based expertise

- Group behaviour is more important than the knowledge or experience held by individuals in a group

- What’s measured (by funders) isn’t always what’s important

Written down like this, these might seem like obvious points. The important thing is that most contemporary grantmaking processes and behaviours aren’t designed to support these ideas. This has led Bea to the following questions:

How might grantmaking support collective intelligence over individual intelligence?

e.g. Most existing grant application processes reduce the application form to a one-to-one or few-to-few experience. Is there a way to encourage effective collaboration as part of this process?

How might collective intelligence help grantmakers listen more effectively to lived experience?

e.g. Aggregating case studies into qualitative databases of experiences, stories or hopes so they can be analysed and visualised in new ways.

How might grantmakers value a group’s behaviours as much as their individual qualifications?

e.g. Rather than asking for applicants’ CVs or profiles, why not focus on ways to understand how effectively the team works together?

How might collective intelligence help grantmakers get better at seeing what’s important?

e.g. What can (distributed) groups say/show about an issue or place beyond traditional measures and outcomes data?

These questions have potentially huge implications for the standard grantmaking process and the skills grantmaking organisations need. They start to prioritise relationships over project plans and embodied knowledge alongside hard data. They hint at requiring funders to have skills in behavioural psychology, facilitation, data science, coding and machine learning. They start to invest in community groups through developing collaboration skills and new types of knowledge, as well as money. And they can help communities reframe and tell their own stories about what is happening in a place or around an issue.

We might be looking at asking applicants to make ‘feral maps’ of their communities as a way to build shared understanding beyond data. We’d need to find ways to accommodate radical learning and changes of direction within funded projects. What if grant assessors were experts in team facilitation and behaviour rather than reading Word documents? How can funders make the most of new datasets they’re gathering, for example from aggregated lived experience?

Finished affinity mapping (Ian)

This week we finished grouping the problems, opportunities and insights we’d gleaned from our user-research interviews.

We’ve now given these groups headings and discussed the relationships between them. You can see them in their raw state here.

Next week we’ll write them up more coherently and separately from these weeknotes. This user-research will sit alongside the journey-maps we’ve also been working on.

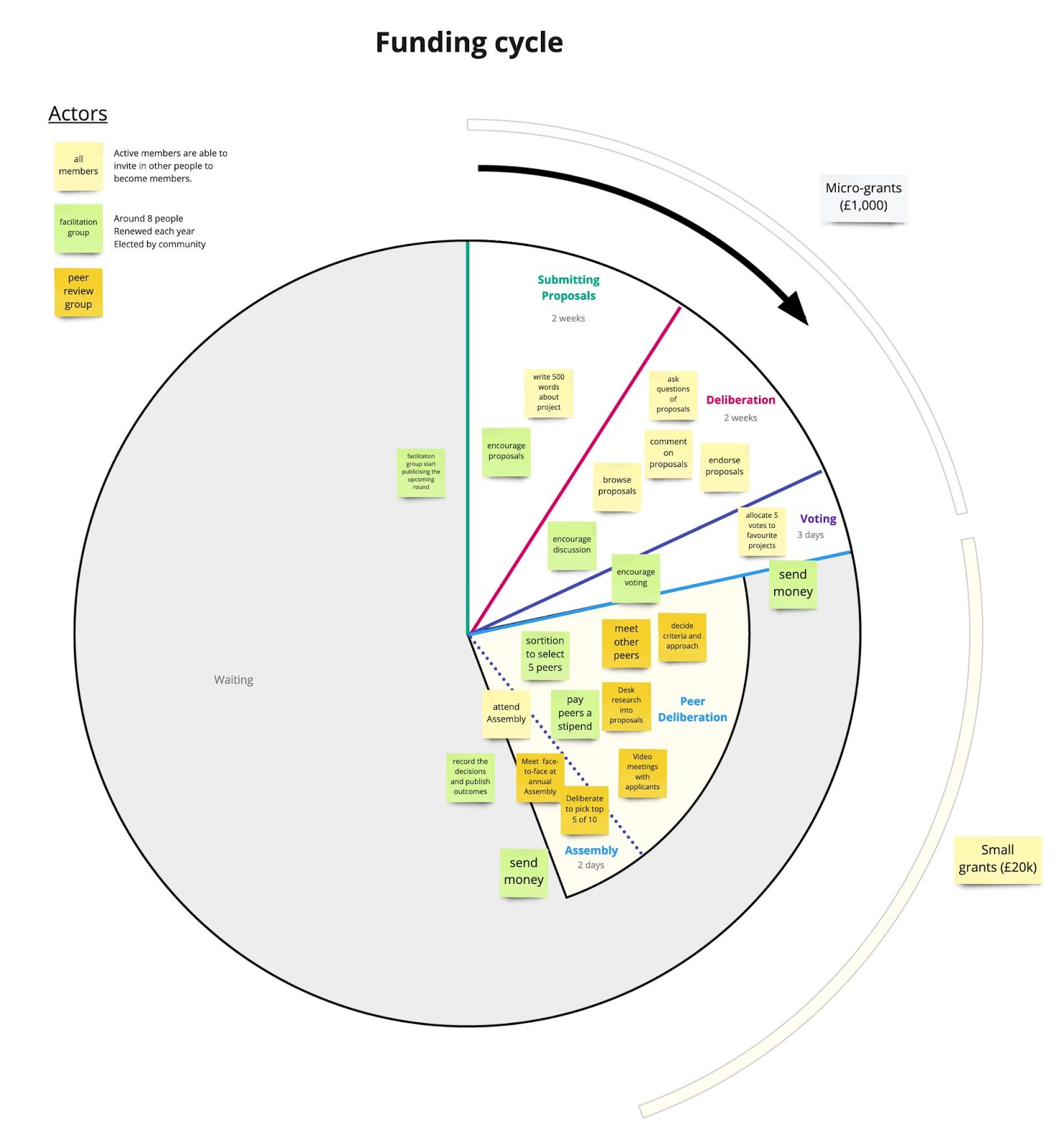

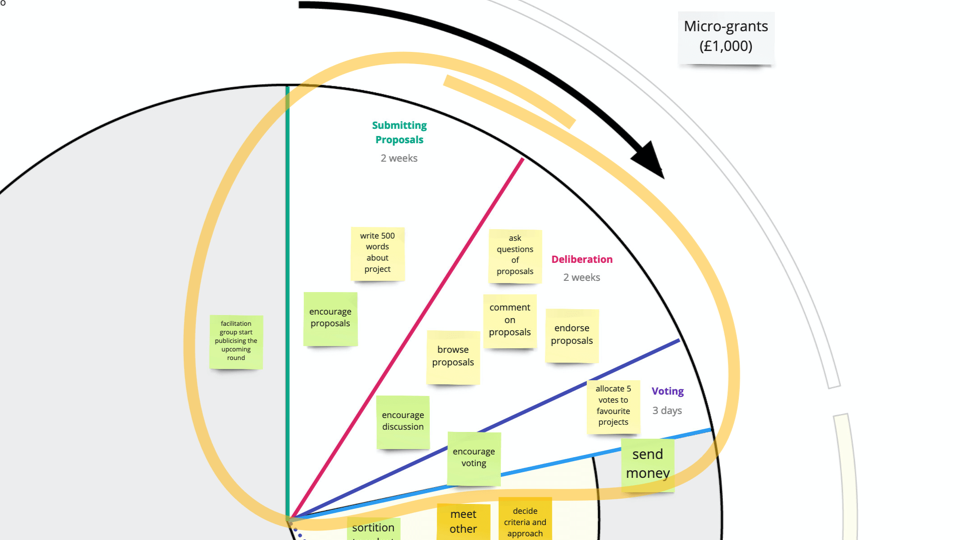

Made a circular journey map (Ian)

Back in week 4 we began to create a journey map of how a funder might test participatory grant-making (PGM). This was an idealised map, based on our interviews with Khadra from Camden Giving and Samuel from The Other Foundation.

Then we spoke with Isis from Edge Fund and Rose from Fundaction. The way these two organisations were doing PGM felt significantly different.

- They are membership organisations where any member can apply for a grant

- The funds were created by a group of foundations and activists as equals.

- There aren’t ‘staff’ running the process - rather a ‘facilitation group’ of elected members supports the funding cycles

We thought that what we were learning was worth mapping, but this time wanted to try and map it as a circle. This felt more representative of the cycles that PGM goes through.

This is our first draft:

You can also take a closer look on Miro. It’s a little raw right now. And probably too early to be hitting publish. We’ll turn this into a stand-alone journey-map next week with annotations to help you understand how to read it.

Areas for prototyping (Paul)

Having spent the last few weeks deep in interviews, we felt we were in a good position to pitch some ideas of areas for prototyping at our Show and Tell.

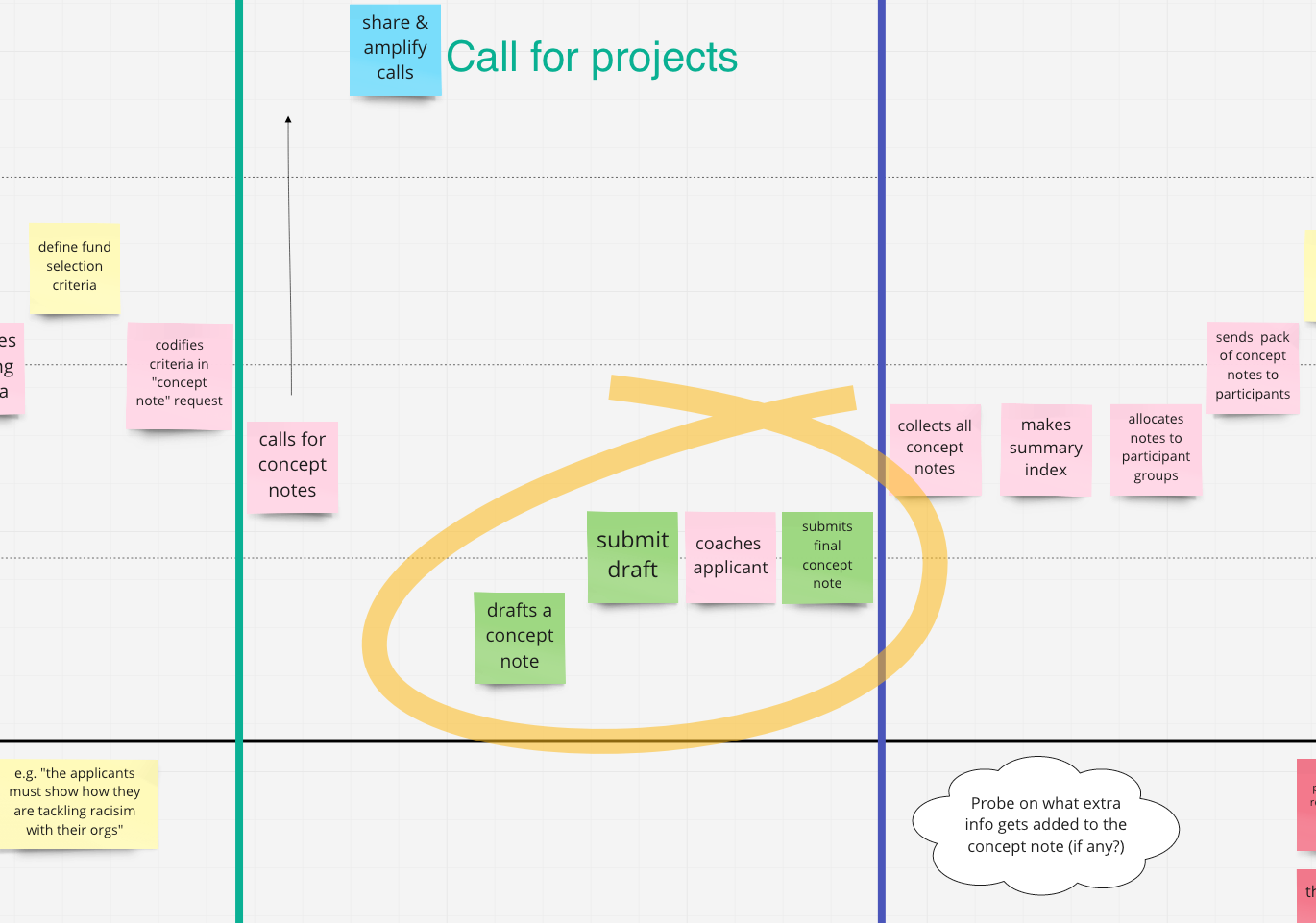

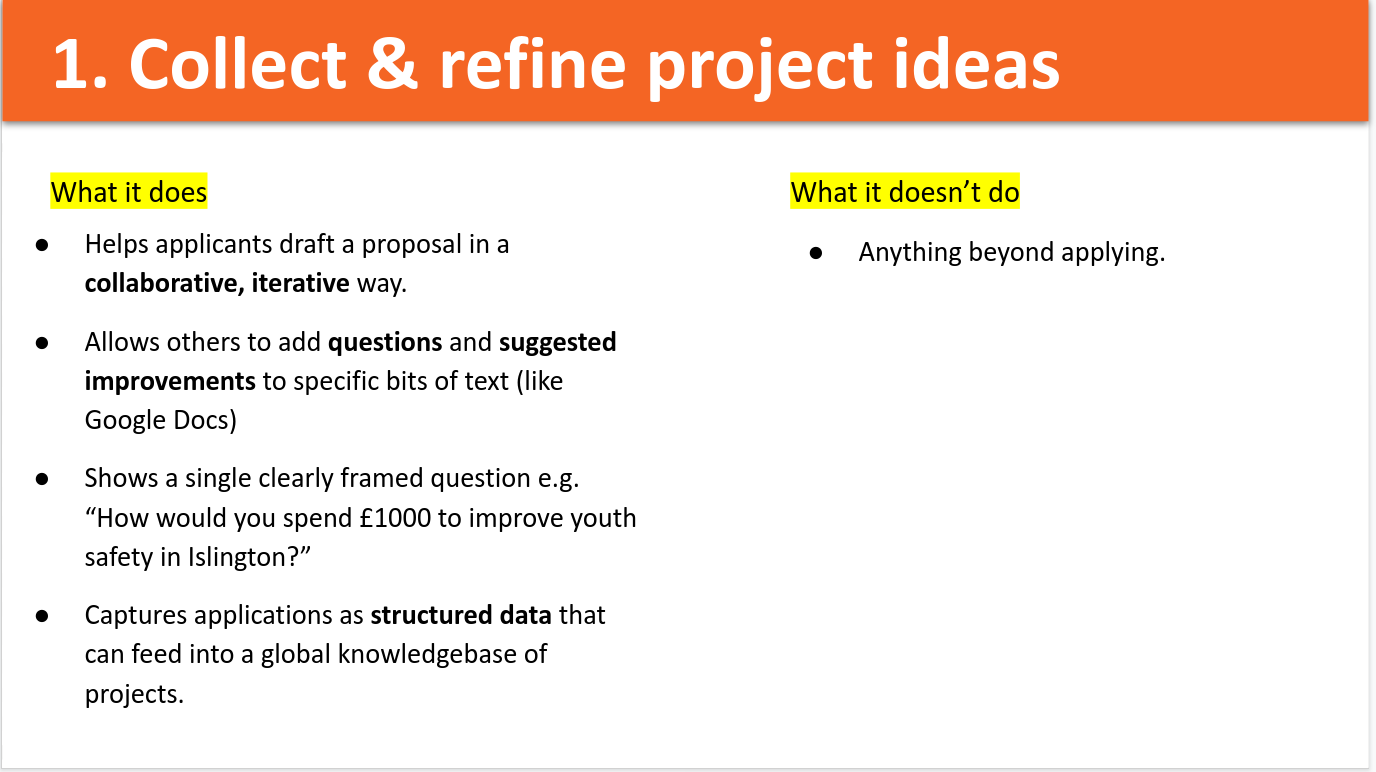

The first area is collecting ideas.

From our research, we identified a number of issues in the area of calling for applications:

Issue: Poor use of tech

Some funds ask applicants to download a Microsoft Word file, type their words into it, save it and send it back by email. This has a number of problems:

- It requires a computer.

- It requires proprietary software (Microsoft Office)

- It often results in huge files (because someone copy-pasted words AND format from their org’s website), which causes problems downstream

- Humans must manually take the words out of the Word file and put them somewhere else.

Issue: Applications are treated as fire and forget

… rather than a living document that goes through iterations and evolves over a period of time.

We heard about fund staff giving support on applications - by emailing Word documents back and forth - not an ideal experience!

Even better practice, like using Typeform, assumes that applications are “done” once and don’t go through any cycles of iteration.

Issue: applications are treated as an individual task

… rather than a collective one.

We know that in organisations, several people have input to an application, not a single person. The same is true for individuals applying: the person doing a project may not be good at writing applications and so get help from others.

So why do funds treat applications as a task that a single person is involved in (submitting an application form) rather than a collaborative, living document?
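To make the “living document” idea a bit more concrete, here is a minimal sketch in Python of an application stored as a series of revisions from several contributors. The class and field names are hypothetical and purely illustrative - this isn’t a design we’ve committed to.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Revision:
    """One saved iteration of the application text (hypothetical structure)."""
    author: str
    text: str
    saved_at: datetime


@dataclass
class Application:
    """An application treated as a living document rather than a one-off form."""
    title: str
    revisions: list[Revision] = field(default_factory=list)

    def save(self, author: str, text: str) -> None:
        # Every save is appended, so earlier iterations are never overwritten.
        self.revisions.append(Revision(author, text, datetime.now(timezone.utc)))

    @property
    def current_text(self) -> str:
        # The most recent revision is what a reviewer would read.
        return self.revisions[-1].text if self.revisions else ""

    @property
    def contributors(self) -> list[str]:
        # Everyone who has had input, in order of first contribution.
        seen: list[str] = []
        for revision in self.revisions:
            if revision.author not in seen:
                seen.append(revision.author)
        return seen
```

The point of the sketch is that the history and the list of contributors come for free once an application is a sequence of saves rather than a single submission.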

Issue: Applications are too complicated

They have too many questions. Sometimes they ask for too much upfront (documents required for due diligence being requested before the concept has even been accepted).

Prototype idea 1

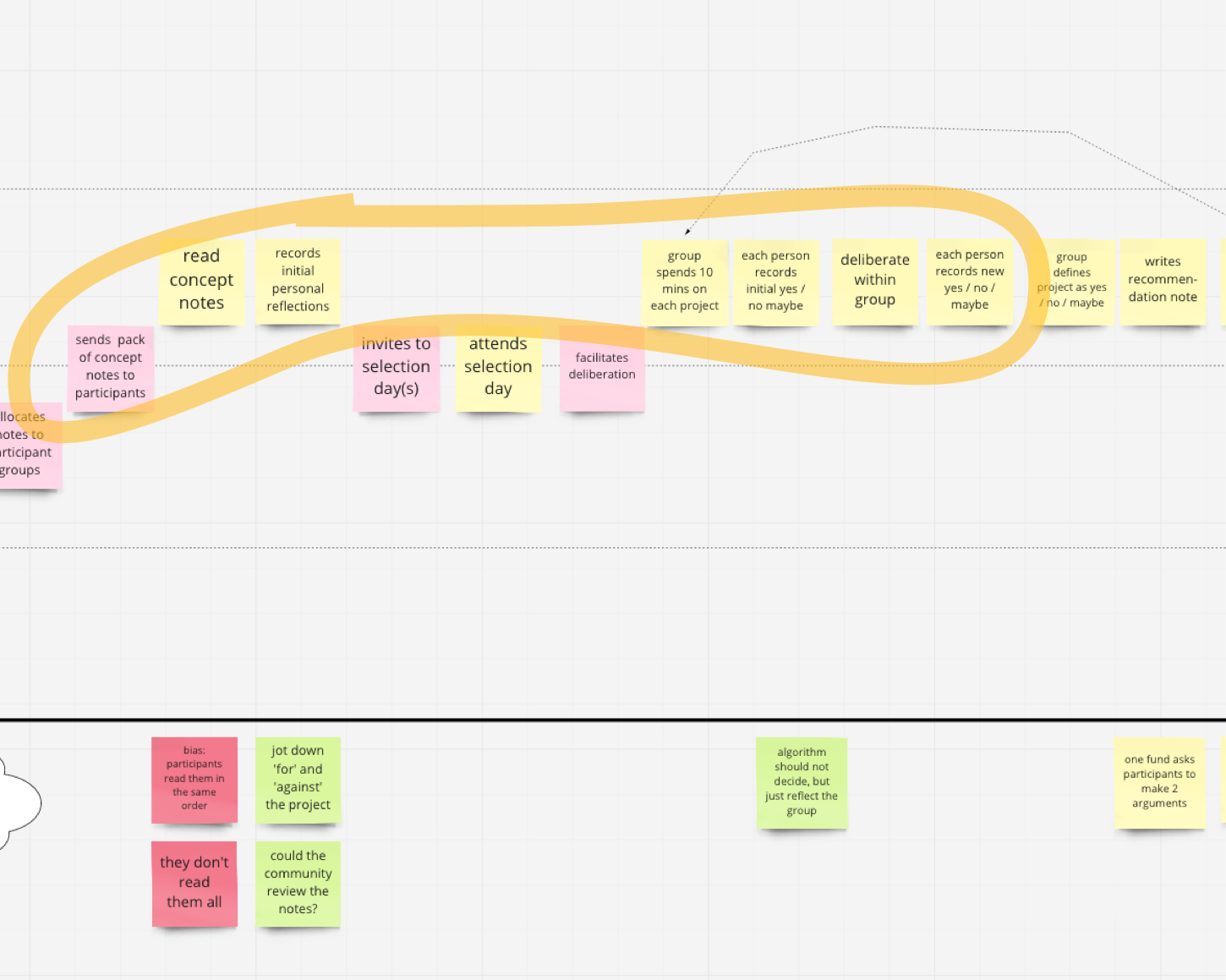

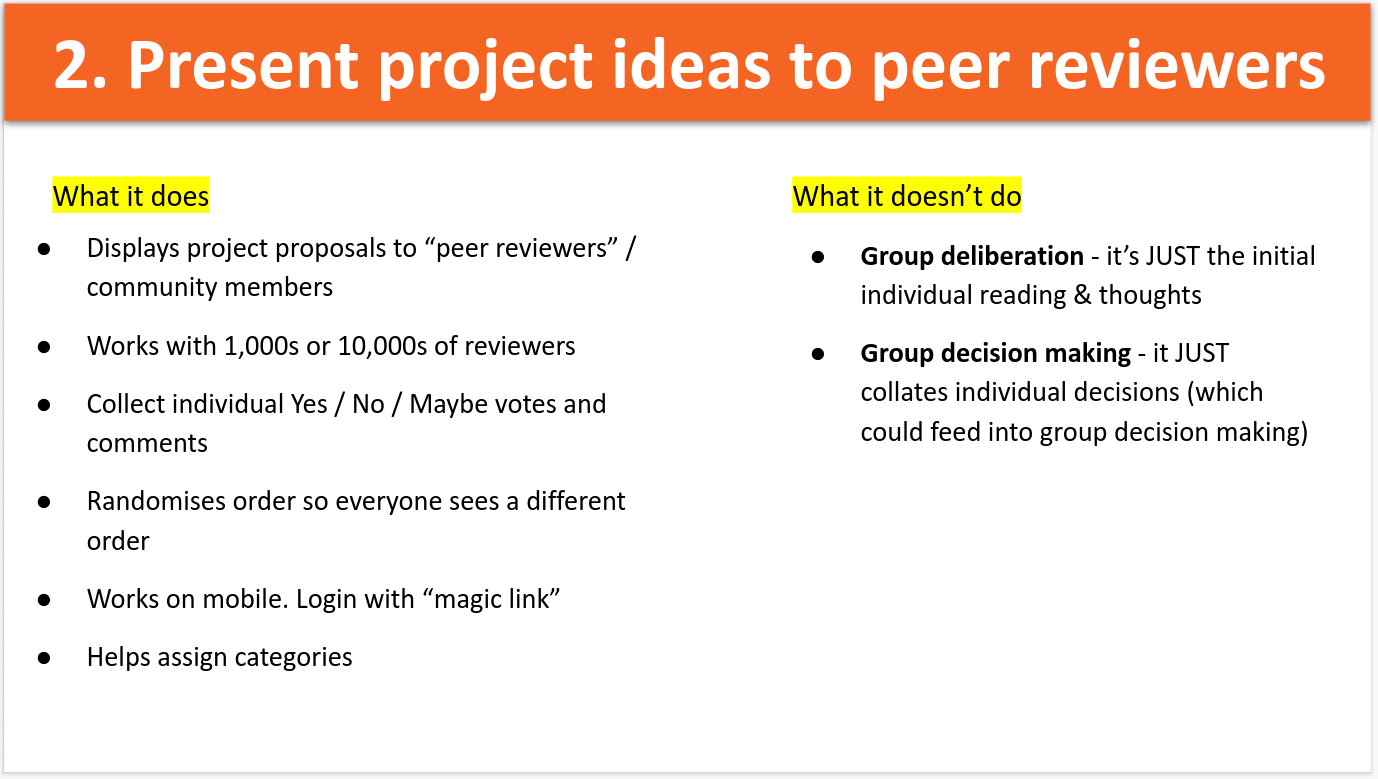

The second area is reviewing ideas.

Issue: sending a bunch of Word files to peer reviewers

… and asking them to review ahead of time.

That’s not convenient for a couple of reasons - Word files cannot be reviewed on a phone, so the reviewer would need to specifically switch to using a computer.

Worse, they can’t be reviewed piecemeal - say 10 minutes at a time - but rather would require a big chunk of dedicated time.

Issue: Most people haven’t read all the applications before the meeting

…probably for several reasons, but likely to do with the inconvenience described above.

Issue: Applications are read in the same order by everyone

That introduces bias - like the well-studied case of judges giving harsher sentences before lunchtime - perhaps reviewers are more (or less) favourable to the first applications they read.

Worse, if most people don’t read all the applications, the same applications are going to have been missed by every peer reviewer.
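One way a prototype might tackle both problems is to give each reviewer the same applications in a different order. The sketch below is our assumption of how that could work, not something we’ve built: it shuffles the applications once, then starts each reviewer at a different point in the list. The function name and parameters are hypothetical, and a simple rotation like this is not a rigorous counterbalancing design.

```python
import random


def reading_orders(applications, reviewers, seed=None):
    """Return a dict mapping each reviewer to their own reading order.

    Every reviewer still gets every application, but starting points are
    spread evenly through one shuffled list.
    """
    rng = random.Random(seed)  # a seed makes the orders reproducible
    shuffled = list(applications)
    rng.shuffle(shuffled)

    orders = {}
    count = len(shuffled)
    for index, reviewer in enumerate(reviewers):
        # Spread the starting points evenly across the shuffled list.
        start = (index * count) // len(reviewers)
        orders[reviewer] = shuffled[start:] + shuffled[:start]
    return orders
```

With six applications and three reviewers, each reviewer starts two applications further along than the previous one, so even if everyone only reads their first two, all six get read by someone.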

Issue: Funds send guidance & summaries as well as the applications

Summaries by fund staff are an opportunity to introduce bias. Sending guidance notes as a separate thing (e.g. email attachment) is inconvenient for the reviewer, especially on a phone - imagine trying to switch between a spreadsheet and a Word doc on your phone - even if your phone could open either!

Prototype idea 2

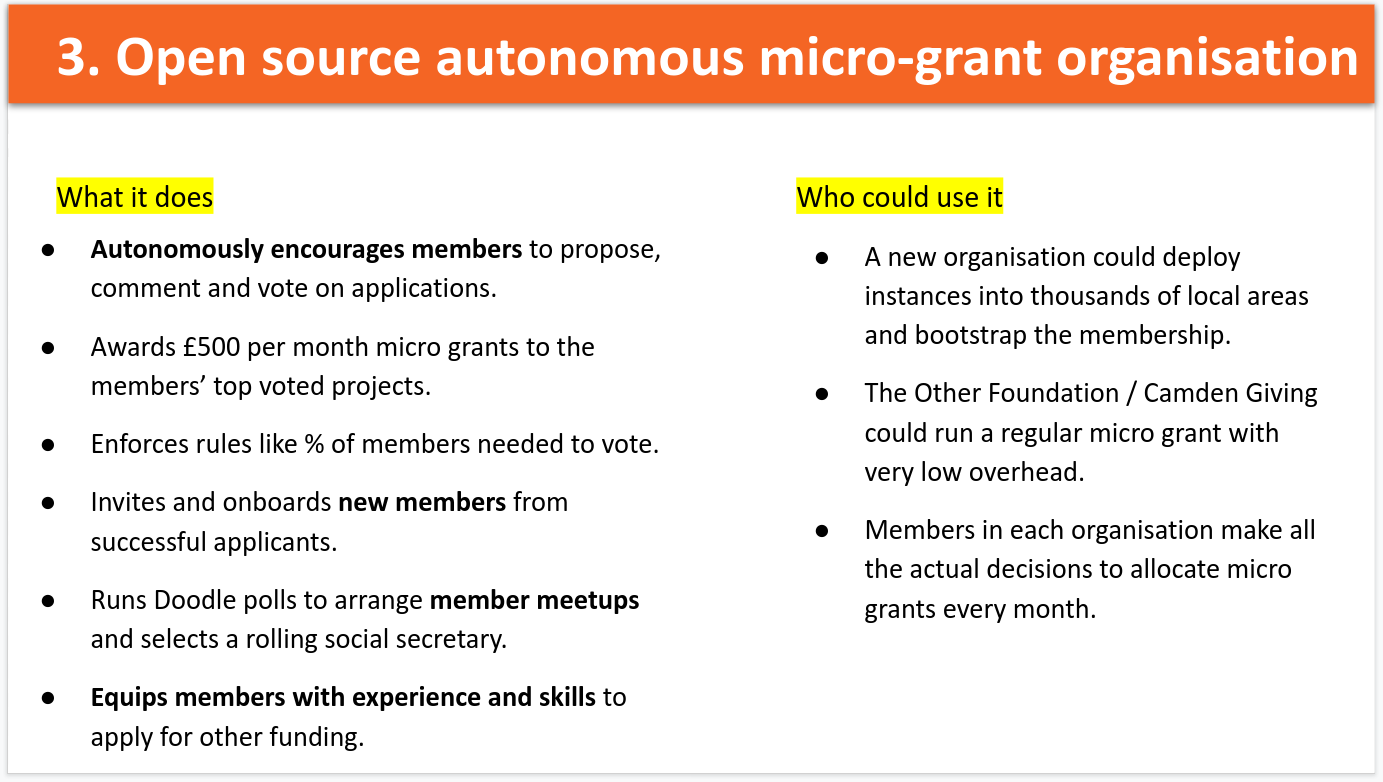

The third area is the first part of the circular journey map:

Prototype idea 3

Why we think it’s a good area to prototype:

- It can demonstrate slick user experience and encompass the first two prototype areas.

- It’s provocative: it shows the extreme of removing staff and their bias.

- It can demonstrate radical transparency because all data and decisions are structured. It can state “this project was funded on this day, and was voted on by these people, with these results”.

- It demonstrates how tech could achieve HUGE scale by removing humans. What if the Lottery used it to grant fund every postcode, every month?

- It’s not unrealistic: think about Meetup.com. Every month it encourages organisers to host a new event, it goes out and finds possible attendees, it takes payments, it gets their RSVP, it collects feedback afterwards.
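As a rough illustration of the “radical transparency” point, here is a minimal Python sketch of what a structured decision record might look like, and how the public statement could be generated from it. The names are hypothetical, and the simple-majority rule is an assumption for the example rather than how any fund we spoke to actually decides.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass
class FundingDecision:
    """A structured record of one funding decision (hypothetical fields)."""
    project: str
    decided_on: date
    votes: dict[str, bool]  # voter name -> True for "fund", False for "don't fund"

    def statement(self) -> str:
        in_favour = sum(self.votes.values())
        against = len(self.votes) - in_favour
        # Assumption for this sketch: a simple majority decides the outcome.
        outcome = "funded" if in_favour > against else "not funded"
        voters = ", ".join(sorted(self.votes))
        return (
            f"{self.project} was {outcome} on {self.decided_on.isoformat()}, "
            f"voted on by {voters}: {in_favour} for, {against} against."
        )
```

Because the record is structured, the same data could feed a public log, a dashboard or an export without anyone rewriting it by hand.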

Next week

We’ll finish the research synthesis by taking the themed areas from our Miro board and writing up a bunch of statements about what we learned. We’ll publish those here along with smartened-up journey maps.